Getting Started

So I’ll confess, I’m pretty late to the party here. ChatGPT and other generative AI models have taken the popular conciousness by storm while I’ve been sitting around trying to ignore the hype. I’ve seen previous advancements in generative audio and imagery models and shrugged at the relative lack of societally beneficial applications that surfaced. I’ve even been uncomfortable using the term ‘artificial intelligence’ to refer to any system – you’ll notice it doesn’t appear in any of my content – but that may be starting to change.

I am here to admit that I am pleasantly surprised at the performance and utility of the current state-of-the-art generative language models. Are there potentially huge dangers in terms of bias, propoganda, and uneven distribution of benefits? Yes, of course. (If you’d like more discussion about the risks I see, jump to the end of this post.) However, just because a tool is dangerous doesn’t mean we shouldn’t learn to use it carefully and make it better.

I’ve seen loads of ChatGPT-generated text on social media lately, but what changed my mind was the ability to interact with ChatGPT around code and related concepts. Perhaps if I had tried GitHub Copilot sooner, I wouldn’t be acting as taken aback right now. In any case, I wanted to provide an example of runnable code and its explanation in order to (1) justify my fanboyism, and (2) because I haven’t seen examples of complex code being generated in my social media feeds.

So, without further ado, here is the unedited reponse to a prompt that I gave ChatGPT to write an article with working code about a specific type of topic modeling called non-negative matrix factorization, or NMF, to be used on a corpus of paper abstracts from arxiv.org. I gave it specific instructions on which python libraries to use and how to visualize the results.

Begin ChatGPT

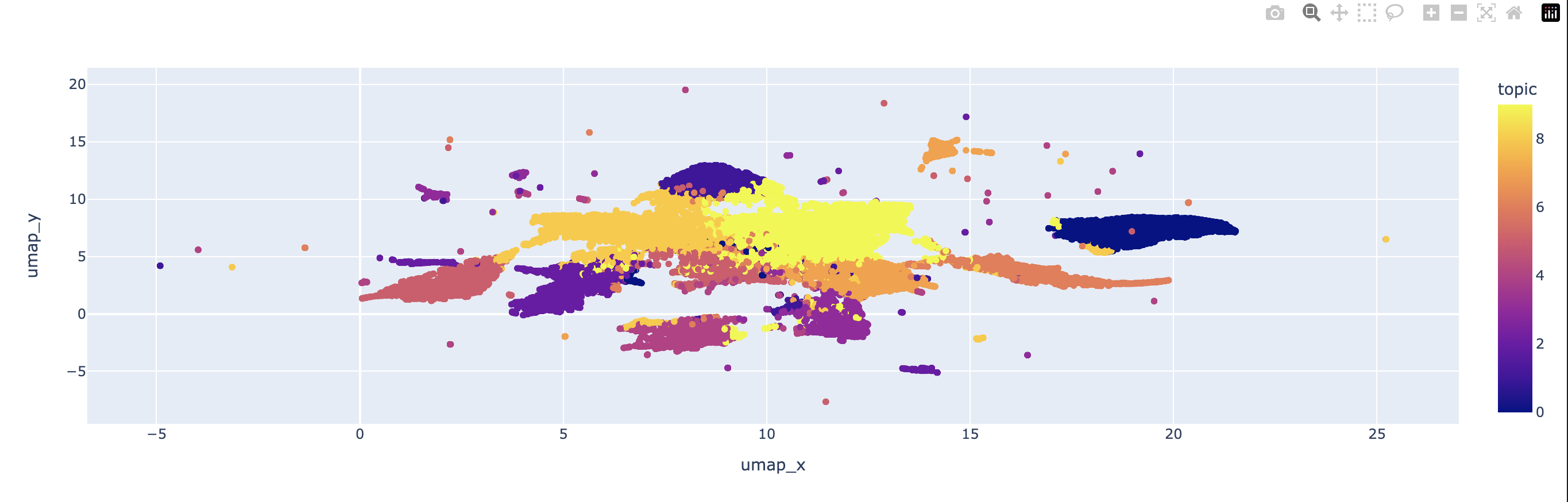

In this article, we’ll discuss how to analyze a corpus of academic articles using topic modeling and visualize the results as a scatter plot. Specifically, we’ll use Python to read in a CSV file containing article titles and summaries, preprocess the text, and perform topic modeling using Non-negative Matrix Factorization (NMF). Finally, we’ll use UMAP to position the articles in a scatter plot with colors representing topics.

To start, we need to import the necessary Python libraries:

import pandas as pd

import numpy as np

import re

import string

from sklearn.feature_extraction.text import TfidfVectorizer

from sklearn.decomposition import NMF

import umap

import plotly.express as px

Next, we’ll read in the CSV file and concatenate the title and summary fields into a single text field:

df = pd.read_csv('articles.csv')

df['text'] = df['title'] + ' ' + df['summary']

We’ll then preprocess the text by converting all text to lowercase, removing punctuation and digits, and removing stopwords. We’ll use the NLTK library for this:

import nltk

nltk.download('stopwords')

from nltk.corpus import stopwords

stop_words = set(stopwords.words('english'))

def preprocess_text(text):

text = text.lower()

text = re.sub(r'\d+', '', text)

text = text.translate(str.maketrans('', '', string.punctuation))

text = ' '.join([word for word in text.split() if word not in stop_words])

return text

df['processed_text'] = df['text'].apply(preprocess_text)

Now, we’ll create a TF-IDF vectorizer and use it to transform the preprocessed text into a matrix of TF-IDF features:

tfidf_vectorizer = TfidfVectorizer(max_df=0.95, min_df=2, max_features=1000, stop_words='english')

tfidf = tfidf_vectorizer.fit_transform(df['processed_text'])

We’ll then use NMF to perform topic modeling with 10 topics:

nmf_model = NMF(n_components=10)

nmf_features = nmf_model.fit_transform(tfidf)

Finally, we’ll use UMAP to reduce the dimensionality of the NMF features and plot the articles as a scatter plot with colors representing topics:

umap_model = umap.UMAP(n_neighbors=15, n_components=2, min_dist=0.1, metric='cosine')

umap_embeddings = umap_model.fit_transform(nmf_features)

topics = np.argmax(nmf_features, axis=1)

fig = px.scatter(x=umap_embeddings[:,0], y=umap_embeddings[:,1], color=topics)

fig.show()

In this scatter plot, each dot represents an article, and the colors represent the topic assigned by NMF. We can use this plot to explore the topics and their relationships to each other.

In conclusion, we’ve shown how to perform topic modeling on a corpus of academic articles using NMF and visualize the results as a scatter plot with UMAP. This analysis can be useful for exploring the topics and relationships within a corpus of text data.

End ChatGPT

My two cents

Now, if you’re like me, you’ll want to explore the plot that was generated. I’ve hosted it here for easy interaction. Back to the main point, though.

I’m pretty impressed by how well today’s generative models can write and explain code, at least providing a solid starting point for further iteration. There certainly are caveats and errors, and of course ChatGPT falls down whenever it’s asked questions that are outside its training data. For instance, good luck getting working examples on more recently updated libraries that have major changes to their API or library structure since 2021. As a former chemist, I can also confirm ChatGPT is … not optimized … for use in the chemical sciences. This New Yorker article also highlights its lack of arithmetic knowledge while providing a good analogy for how ChatGPT works.

But on balance, I’m still excited by the ability of these large language models to generate sensible, well-documented, and somewhat efficient code and then turn around and explain the code that was generated. Stitching together the outputs of several different libraries, even if they are commonly used together, is no simple task, and it would have taken me a couple of hours of research to throw together more poorly documented code to do the same thing.

ChatGPT generated the above article with working code in about 30 seconds.

The major risks I see with this tool are bias, intentional and unintinentional misinformation, and potential harm to the learning process. I’d like to spend some time here on that last point – detriment to learning and creativity – because I think this is a point that is most unique to these large language models compared to other data science tools.

What we miss when we skip reading documentation, experimenting with a Python objects methods and attributes, or simply investigating our data further is the foundational knowledge that is critical to nearly any project’s success. I’m not saying don’t use ChatGPT if you want to be successful in a given project, but I am saying don’t skip the research phase, however great the temptation. For instance, ChatGPT’s working code above misses potentially important parts of text preprocessing that one can only learn through the experience of examining others’ code and interrogating one’s own data. It also doesn’t deliver a user experience in the final plot that any human would enjoy. (It would be much more useful to see a name for the topic and the top N terms, rather than a meaningless topic number.)

Wrapping up

So go ahead – use ChatGPT to generate some portions of your code. It will save you time and effort. Just look out for mistakes, do your own homework, and figure out why the methods it implements work or don’t work. Strike a balance between sheer productivity and thoughtful creation. I promise you’ll be happy you did.